Based on the title, it should be obvious that this isn’t the updated write-up on my multithreaded renderer that I mentioned in my last post. That one is still in the works, but today I thought I’d jump into D3D Shader Reflection: how it is both great and worth implementing, and completely ruined by its limitations.

So, my shader manager has undergone very little change since I first implemented it last year for Evac. It’s a very simple class, designed to hold all of my compiled shaders, keep track of the last shader used, and allow materials to switch shaders on demand. Very bare bones, but very functional. Its biggest problem has been that I have to do too many things manually when I want to add a new shader. I have to write the HLSL, add an entry to my shaders enum, and write a new C++ class for the shader. Then I have to update the shader manager, because giving each shader its own class means I have to explicitly create and destroy each new type. Ideally, I should only need to write the HLSL and update the enum, and everything else would happen automatically.

When I was rebuilding my framework this year, this was an area I looked at improving. I was able to refactor my shader class code so that 95% of it was generic, which let a single class service all of my compiled shaders. The problem was input layout generation. An input layout has to be defined per vertex fragment (or at least per unique vertex input struct), so I needed a way to generate it correctly for each shader. That still left me with a C++ class per shader, even though 95% of each one was copy/paste and the only unique code was the input layout declaration. An improvement to be sure, but not nearly enough of one.

Now, I’ve been aware of, and interested in, the shader reflection system provided by D3D for a while. However, I’ve always considered the time it would take to research, implement, and debug not worth it when I already had a working, albeit slightly tedious, shader system. This week finally tipped the scales. I caught myself avoiding implementing something as a new shader because I didn’t want to go through the whole process if I didn’t have to. So, I took the plunge.

Before I get into the source code, there are two things worth sharing. The first is that I am using the shader reflection system solely to generate my input layout from my HLSL in an effort to create a single, generic shader class; the entirety of the system is very powerful and can do a lot more than the small subsection that I’m discussing here. The second is that I took the basis of my implementation from this post by Bobby Anguelov, and it probably is worth a read if this is interesting to you at all. With that said, here’s the function I wrote that generates my input layouts:

```cpp
#include <d3d11.h>
#include <d3dcompiler.h>  // D3DReflect and ID3D11ShaderReflection; link against d3dcompiler.lib
#include <fstream>
#include <vector>

void CompiledShader::CreateVertexInputLayout(ID3D11Device* pDevice, ShaderBytecode* pBytecode, const char* pFileName)
{
    ID3D11ShaderReflection* lVertexShaderReflection = nullptr;
    if (FAILED(D3DReflect(pBytecode->bytecode, pBytecode->size, IID_ID3D11ShaderReflection, (void**) &lVertexShaderReflection)))
    {
        return;
    }

    D3D11_SHADER_DESC lShaderDesc;
    lVertexShaderReflection->GetDesc(&lShaderDesc);

    // Optional metadata file: one "InputSlot InputSlotClass InstanceDataStepRate" triple
    // per input parameter. Without it, every element defaults to slot 0, per-vertex.
    std::ifstream lStream;
    lStream.open(pFileName, std::ios_base::binary);
    bool lStreamIsGood = lStream.is_open();

    // Starts outside the valid slot range so the first element always gets offset 0.
    unsigned lLastInputSlot = 900;
    std::vector<D3D11_INPUT_ELEMENT_DESC> lInputLayoutDesc;

    for (unsigned lI = 0; lI < lShaderDesc.InputParameters; lI++)
    {
        D3D11_SIGNATURE_PARAMETER_DESC lParamDesc;
        lVertexShaderReflection->GetInputParameterDesc(lI, &lParamDesc);

        // Zero-initialize so Format falls back to DXGI_FORMAT_UNKNOWN for unhandled types.
        D3D11_INPUT_ELEMENT_DESC lElementDesc = {};
        lElementDesc.SemanticName = lParamDesc.SemanticName;
        lElementDesc.SemanticIndex = lParamDesc.SemanticIndex;

        if (lStreamIsGood)
        {
            // Read the classification into a plain integer instead of type-punning the enum.
            unsigned lSlotClass = 0;
            lStream >> lElementDesc.InputSlot >> lSlotClass >> lElementDesc.InstanceDataStepRate;
            lElementDesc.InputSlotClass = static_cast<D3D11_INPUT_CLASSIFICATION>(lSlotClass);
        }
        else
        {
            lElementDesc.InputSlot = 0;
            lElementDesc.InputSlotClass = D3D11_INPUT_PER_VERTEX_DATA;
            lElementDesc.InstanceDataStepRate = 0;
        }

        // The first element in a slot starts at 0; later elements in the same slot follow it.
        lElementDesc.AlignedByteOffset = lElementDesc.InputSlot == lLastInputSlot ? D3D11_APPEND_ALIGNED_ELEMENT : 0;
        lLastInputSlot = lElementDesc.InputSlot;

        // Mask has one bit per used component: x = 1, y = 2, z = 4, w = 8.
        if (lParamDesc.Mask == 1)
        {
            if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_UINT32) lElementDesc.Format = DXGI_FORMAT_R32_UINT;
            else if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_SINT32) lElementDesc.Format = DXGI_FORMAT_R32_SINT;
            else if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_FLOAT32) lElementDesc.Format = DXGI_FORMAT_R32_FLOAT;
        }
        else if (lParamDesc.Mask <= 3)
        {
            if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_UINT32) lElementDesc.Format = DXGI_FORMAT_R32G32_UINT;
            else if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_SINT32) lElementDesc.Format = DXGI_FORMAT_R32G32_SINT;
            else if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_FLOAT32) lElementDesc.Format = DXGI_FORMAT_R32G32_FLOAT;
        }
        else if (lParamDesc.Mask <= 7)
        {
            if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_UINT32) lElementDesc.Format = DXGI_FORMAT_R32G32B32_UINT;
            else if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_SINT32) lElementDesc.Format = DXGI_FORMAT_R32G32B32_SINT;
            else if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_FLOAT32) lElementDesc.Format = DXGI_FORMAT_R32G32B32_FLOAT;
        }
        else if (lParamDesc.Mask <= 15)
        {
            if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_UINT32) lElementDesc.Format = DXGI_FORMAT_R32G32B32A32_UINT;
            else if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_SINT32) lElementDesc.Format = DXGI_FORMAT_R32G32B32A32_SINT;
            else if (lParamDesc.ComponentType == D3D_REGISTER_COMPONENT_FLOAT32) lElementDesc.Format = DXGI_FORMAT_R32G32B32A32_FLOAT;
        }

        lInputLayoutDesc.push_back(lElementDesc);
    }

    lStream.close();

    // Use data()/size(): &vec[0] is undefined behavior on an empty vector.
    if (!lInputLayoutDesc.empty())
    {
        pDevice->CreateInputLayout(lInputLayoutDesc.data(), static_cast<UINT>(lInputLayoutDesc.size()),
                                   pBytecode->bytecode, pBytecode->size, &m_layout);
    }

    lVertexShaderReflection->Release();
}
```

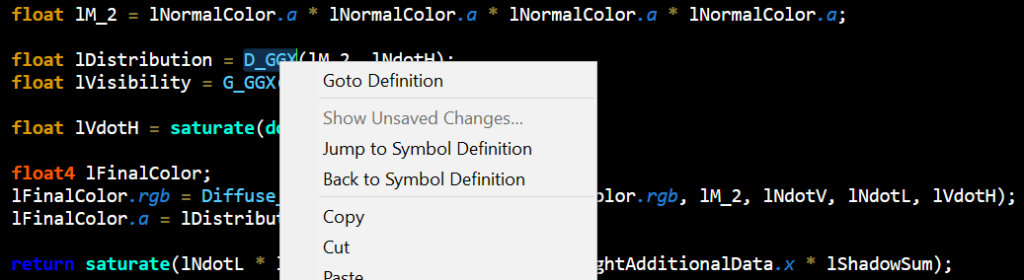
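The if/else chain on Mask in the function above is effectively counting components. The signature mask has one bit per used component (x = 1, y = 2, z = 4, w = 8), and the position of the highest set bit gives the component count. That count matches the chain for every mask from 1 to 15. The same mapping can be sketched as a table lookup. ComponentKind, ComponentCountFromMask, and FormatNameFor are hypothetical names for illustration. The sketch returns format names as strings so it builds without the D3D headers, whereas real code would store DXGI_FORMAT values in the table.

```cpp
#include <cstdint>
#include <string>

// Hypothetical stand-in for D3D_REGISTER_COMPONENT_TYPE, limited to the three
// 32-bit component types the function above handles.
enum class ComponentKind { Uint32 = 0, Sint32 = 1, Float32 = 2 };

// Component count implied by a signature mask (x = 1, y = 2, z = 4, w = 8):
// the position of the highest set bit. Same result as the if/else chain for masks 1-15.
unsigned ComponentCountFromMask(std::uint8_t mask)
{
    unsigned count = 0;
    while (mask != 0)
    {
        ++count;
        mask >>= 1;
    }
    return count;
}

// Format name for a component type and mask; "DXGI_FORMAT_UNKNOWN" when the mask
// has no components or more than four.
std::string FormatNameFor(ComponentKind kind, std::uint8_t mask)
{
    static const char* const kNames[3][4] =
    {
        { "DXGI_FORMAT_R32_UINT",  "DXGI_FORMAT_R32G32_UINT",  "DXGI_FORMAT_R32G32B32_UINT",  "DXGI_FORMAT_R32G32B32A32_UINT" },
        { "DXGI_FORMAT_R32_SINT",  "DXGI_FORMAT_R32G32_SINT",  "DXGI_FORMAT_R32G32B32_SINT",  "DXGI_FORMAT_R32G32B32A32_SINT" },
        { "DXGI_FORMAT_R32_FLOAT", "DXGI_FORMAT_R32G32_FLOAT", "DXGI_FORMAT_R32G32B32_FLOAT", "DXGI_FORMAT_R32G32B32A32_FLOAT" },
    };
    const unsigned count = ComponentCountFromMask(mask);
    if (count == 0 || count > 4)
        return "DXGI_FORMAT_UNKNOWN";
    return kNames[static_cast<int>(kind)][count - 1];
}
```

The table trades the twelve near-identical branches for one lookup. It also makes the unhandled case explicit instead of leaving Format untouched.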

Now, some places where I differ from Bobby’s implementation.

The easiest is the AlignedByteOffset. He keeps track of how many bytes each parameter of the input struct takes up and calculates the offset as he goes. However, the first element of a given input slot always has an offset of zero. Any following element in the same input slot can be given D3D11_APPEND_ALIGNED_ELEMENT and still get the correct result. It’s a small difference, and his version works, but mine is simpler code and less likely to ever become a headache. I’m assuming you’re not doing any weird packing on your input structs that could break my code, but that would break Bobby’s too, so I don’t feel too bad about it. I’m also assuming that all the elements of one input slot sit next to each other in the struct, because the last-slot check only works that way. You’ll also notice my lLastInputSlot variable starts at 900, and you probably think that’s weird. The API documentation says that valid values for input slots are 0 to 15. I needed a starting value that can never match a real slot, so that the first element in slot 0 correctly gets an offset of 0. Any value > 15 would work; I picked 900 for no good reason.
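Pulled out of the D3D code, the offset rule is small enough to sketch on its own. This sketch assumes elements that share a slot are declared contiguously. OffsetsForSlots and kAppendAligned are hypothetical names. kAppendAligned mirrors the value of D3D11_APPEND_ALIGNED_ELEMENT (0xffffffff) so the sketch compiles without d3d11.h.

```cpp
#include <cstdint>
#include <vector>

// Same value as D3D11_APPEND_ALIGNED_ELEMENT, redefined so this builds without D3D headers.
constexpr std::uint32_t kAppendAligned = 0xffffffffu;

// Given each element's input slot in declaration order, return the AlignedByteOffset it
// would get: 0 for the first element of a slot, "append aligned" for the rest of that slot.
// Assumes all elements of a slot are declared next to each other.
std::vector<std::uint32_t> OffsetsForSlots(const std::vector<std::uint32_t>& slots)
{
    std::uint32_t lastSlot = 900;  // any value outside the valid slot range works
    std::vector<std::uint32_t> offsets;
    offsets.reserve(slots.size());
    for (std::uint32_t slot : slots)
    {
        offsets.push_back(slot == lastSlot ? kAppendAligned : 0u);
        lastSlot = slot;
    }
    return offsets;
}
```

For slots {0, 0, 0, 1, 1}, this yields 0, append, append, 0, append. If the magic 900 bothers you, you could use a std::optional<std::uint32_t> that starts empty. It expresses "no previous slot" without relying on the valid slot range at all.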

Now we start to get into the territory that infuriates me about D3D shader reflection. The worst part is that I understand why this limitation exists, and I understand that it’s reasonable for it to be this way. I’m still mad that I can’t write code that fully automates this process and lets me be as lazy as I want to be. I am referring to the unholy trio of InputSlot, InputSlotClass, and InstanceDataStepRate. These are related fields. If you never combine multiple input streams, you can safely default them to 0, D3D11_INPUT_PER_VERTEX_DATA, and 0 and live a happy, carefree life. However, if you’re doing any batching by combining input streams, this becomes a very different, and much more annoying, story.
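To make the trio concrete, here is what a hand-written layout combining two streams might look like. It is a hypothetical example, and the semantics and formats are only illustrative. Reflection can recover the semantic names, indices, and formats. The InputSlot, InputSlotClass, and InstanceDataStepRate values are exactly the parts it can’t know.

```cpp
#include <d3d11.h>

// Hypothetical two-stream layout: per-vertex data in slot 0, per-instance data in slot 1.
// Field order: SemanticName, SemanticIndex, Format, InputSlot, AlignedByteOffset,
//              InputSlotClass, InstanceDataStepRate
const D3D11_INPUT_ELEMENT_DESC kInstancedLayout[] =
{
    { "POSITION", 0, DXGI_FORMAT_R32G32B32_FLOAT,    0, 0,                            D3D11_INPUT_PER_VERTEX_DATA,   0 },
    { "TEXCOORD", 0, DXGI_FORMAT_R32G32_FLOAT,       0, D3D11_APPEND_ALIGNED_ELEMENT, D3D11_INPUT_PER_VERTEX_DATA,   0 },
    // Slot 1 advances once per instance (step rate 1) instead of once per vertex.
    { "COLOR",    0, DXGI_FORMAT_R32G32B32A32_FLOAT, 1, 0,                            D3D11_INPUT_PER_INSTANCE_DATA, 1 },
};
```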

See, the reflection system is able to glean every other necessary piece of data from your HLSL because that data exists directly in your HLSL; that’s how reflection works. However, nothing in the HLSL denotes which input slot a parameter belongs to, whether it’s per-vertex or per-instance data, or what the step rate is for per-instance data. There aren’t even any optional syntax keywords to give the reflection system hints about what you want, which would have been an acceptable way to make this work. Instead, you get nothing! So, my solution was to create a small metadata file for each vertex shader file. It lists the input slot, input slot class, and instance data step rate for each input struct parameter, in declaration order. If you do the same thing, it’s worth noting that D3D11_INPUT_PER_VERTEX_DATA is 0 and D3D11_INPUT_PER_INSTANCE_DATA is 1.

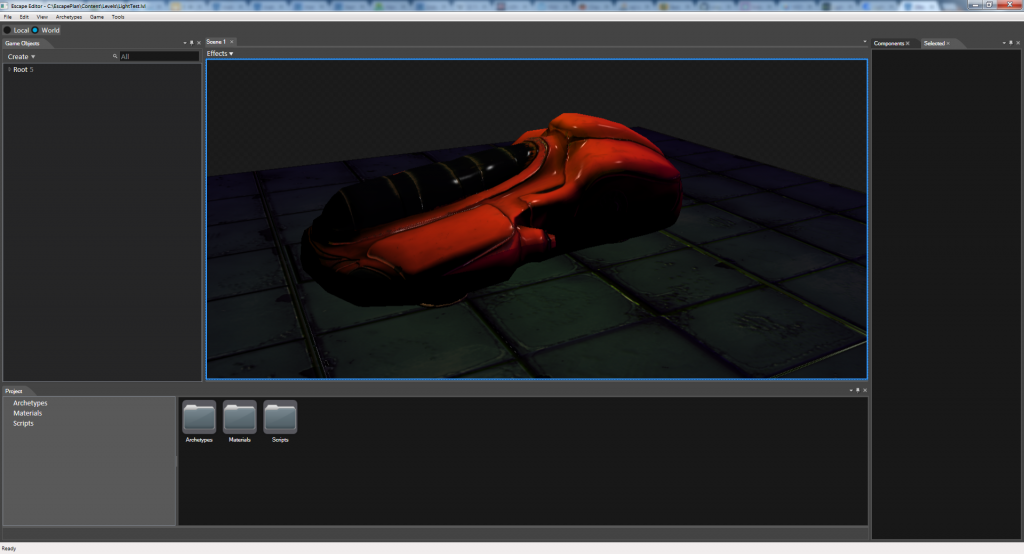

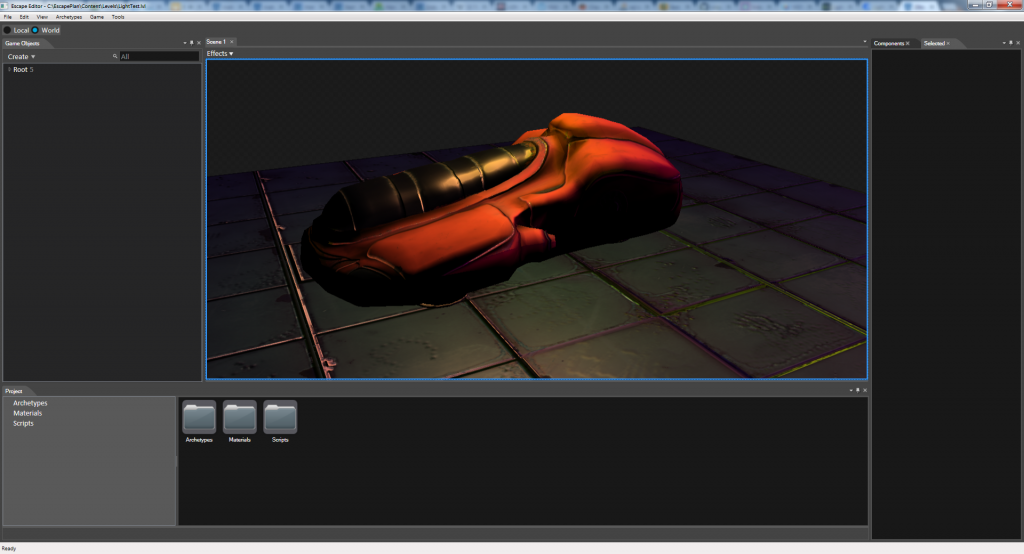

So, I no longer have to create a C++ class per shader, and my shader manager automatically handles new shaders based on additions to my shaders enum, but I do have to create this metadata file per vertex fragment. It’s sub-optimal to be sure, but definitely still a huge win over the old system. And I was able to do the whole overhaul in a day. So, if you’re in anywhere near the same boat I was, I’d definitely recommend looking into the shader reflection system.

However, there is one last caveat. It doesn’t really matter to me as far as shipping my game this year goes, but I could see it being a pain at some point, both for me and probably for other people. If you use the D3DReflect function, you cannot pass validation for the Windows App Store. Microsoft has an insane policy under which you cannot compile or reflect your shaders at runtime at all. I understand the logic here, but I can’t help but think this undermines what’s great about the reflection system: I was able to put in relatively minimal effort and reap a huge benefit. If I wanted to bring my game “up to code” to pass app store validation, I would need to put in a lot more work. I would have to reflect my input layouts into a file at build time and then load that file at runtime. It’s not undoable by any means, but it really forces you to either dive into the deep end or not use these tools at all. I guess I’m just not a fan.

Anyway, that’s that. Hopefully you found something in all of this useful. And at some point soon, I really will write about my finished multithreaded renderer. But there may be a post or two before that happens. We’ll see.