I’m just going to jump into things and not really explain why there’s been a four-month gap since the last post. Suffice it to say… school. However, I now have a lot to post about, so I’m hoping that means several posts in quick succession. I think many of them will also be more directly technical, complete with code samples. We’ll see how that actually shakes out.

So, as mentioned in my last post, one of the things I wanted to do this year was move toward a more physically based rendering system. Now, I might have pipe dreams of implementing global illumination and a full microfacet model and really doing this thing right, but let’s be realistic. I only get so much time around the rest of my classes and staying sane, and it can’t all be spent on my lighting pipeline. I have to deliver features and tools so my team can make our game, and when it comes down to it, our game could ship just fine on last year’s lighting model if it had to. Luckily, short of implementing CryEngine, there were a number of much smaller things I could accomplish that significantly improved both the visual fidelity of the system and artists’ ability to represent a wider range of materials. The first of these was changing the distribution function in my specular calculation.

Now, a little backstory. Over the summer, Blizzard sent all of their graphics programmers to SIGGRAPH, and they were gracious enough to include me even though I was just an intern. While there, I attended the Physically Based Shading course, which presented a lot of information I hadn’t previously considered but which made sense as soon as I saw it; it was basically my experience at GDC last year all over again. All of the talks were useful, but Brian Karis’ talk about UE4’s rendering system was the real catalyst of that morning. I did a lot more research on my own and ended up replacing my Blinn distribution function with GGX, and the results were pretty astonishing even though I didn’t adopt any other part of the Cook-Torrance microfacet model. This change also made the specular texture completely unnecessary; I repurposed its channels to store material properties like roughness, which opened up a lot more possibilities for varying materials across a single model.
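For reference, here is a minimal sketch of the GGX (Trowbridge-Reitz) distribution term, written in Python rather than shader code so it can be run and checked directly. It assumes the roughness-to-alpha remapping (alpha = roughness²) from Karis’ UE4 material model; the function names and the example roughness values for a glossy canopy and a rough body are hypothetical, and this is not the exact shader code from my renderer.

```python
import math


def roughness_to_alpha(roughness: float) -> float:
    """Remap perceptual roughness in [0, 1] to GGX alpha (alpha = roughness^2, as in UE4)."""
    return roughness * roughness


def ggx_ndf(n_dot_h: float, alpha: float) -> float:
    """GGX / Trowbridge-Reitz normal distribution function D(h).

    D = alpha^2 / (pi * ((N.H)^2 * (alpha^2 - 1) + 1)^2)
    """
    if n_dot_h <= 0.0:
        return 0.0  # half vectors below the surface get no microfacets
    a2 = max(alpha * alpha, 1e-7)  # avoid division by zero for a perfectly smooth surface
    d = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * d * d)


# Hypothetical per-texel roughness values, as if read from a repurposed
# texture channel: a glossy canopy and a duller car body.
for name, roughness in (("canopy", 0.2), ("body", 0.7)):
    alpha = roughness_to_alpha(roughness)
    print(name, "peak D =", round(ggx_ndf(1.0, alpha), 3))
```

Because D is normalized (its integral weighted by N·H over the hemisphere is 1), a higher roughness spreads the same energy over a wider, lower lobe instead of simply dimming the highlight, which is what lets a single texture channel drive both shiny and dull regions of one model.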

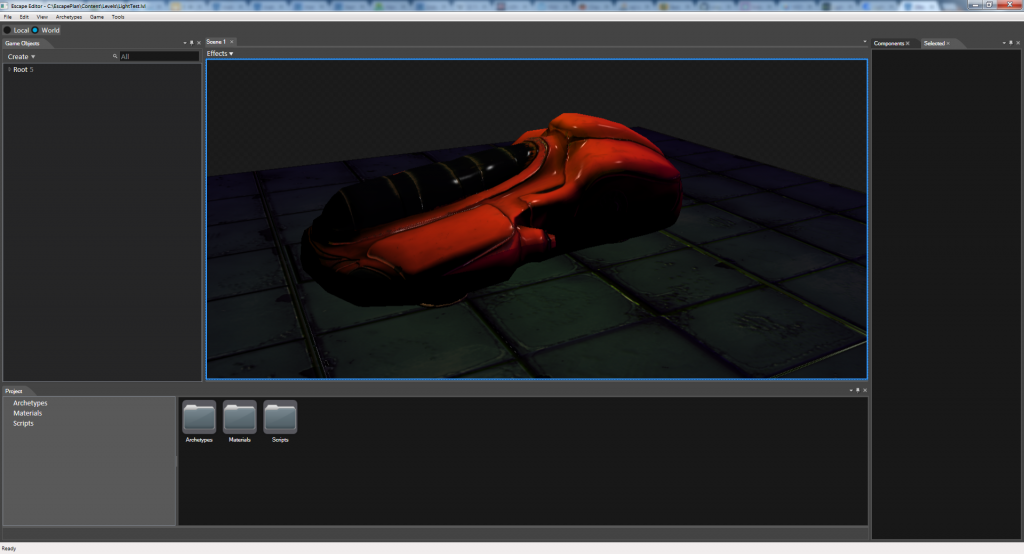

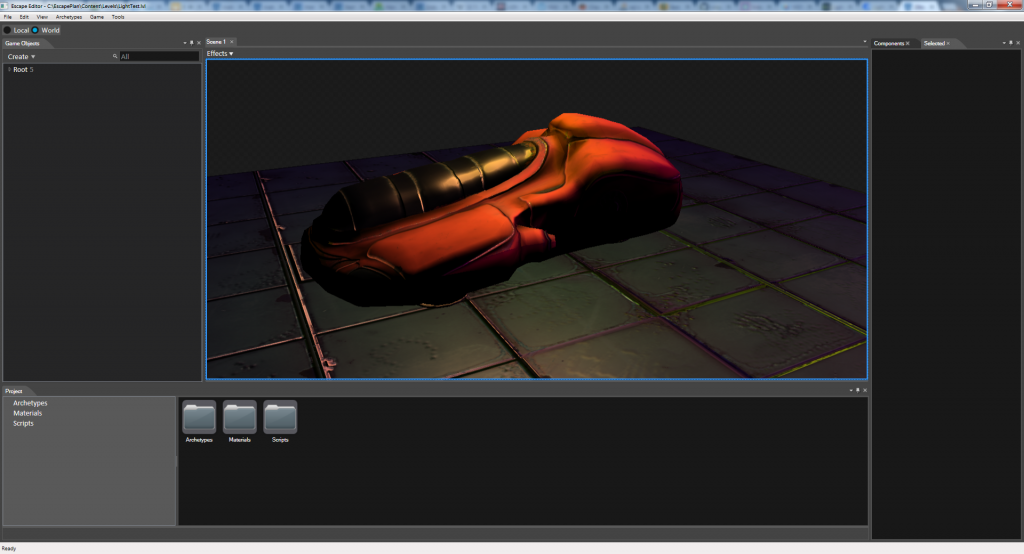

Here is a before and after comparison of the distribution functions:

Now, as a programmer, I am perfectly capable of designing and implementing this system. However, I am no artist, and Photoshop is definitely not my friend. The results here are built on some truly terrible texture manipulation on my part, so you might be scratching your head over how much of an improvement this really was. But I still think it proves my point well enough. In the top image, the entire car looks plasticky, which is pretty common for Blinn. In the bottom image, the highlight tails vary, but the highlights feel much warmer overall. The yellow of the nearby point light also has a stronger effect on the objects in the scene in the GGX version. And because material properties are stored in textures, the GGX version can also present the canopy as shiny while the body is much duller.
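To put some numbers behind the difference in highlight tails, here is a small sketch comparing the normalized Blinn-Phong distribution with GGX. It assumes the common exponent mapping n = 2/α² − 2, which gives both lobes exactly the same peak height; that mapping, the helper names, and the sample alpha are assumptions for illustration, not values taken from the screenshots above.

```python
import math


def blinn_phong_ndf(n_dot_h: float, exponent: float) -> float:
    """Normalized Blinn-Phong distribution: D = (n + 2) / (2 pi) * (N.H)^n."""
    if n_dot_h <= 0.0:
        return 0.0
    return (exponent + 2.0) / (2.0 * math.pi) * n_dot_h ** exponent


def ggx_ndf(n_dot_h: float, alpha: float) -> float:
    """GGX / Trowbridge-Reitz distribution: D = a^2 / (pi * ((N.H)^2 (a^2 - 1) + 1)^2)."""
    if n_dot_h <= 0.0:
        return 0.0
    a2 = alpha * alpha
    d = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * d * d)


def blinn_exponent_for_alpha(alpha: float) -> float:
    """Exponent whose Blinn-Phong peak matches GGX's peak: n = 2 / alpha^2 - 2."""
    return 2.0 / (alpha * alpha) - 2.0


alpha = 0.25  # assumed sample value (roughness 0.5 under alpha = roughness^2)
exponent = blinn_exponent_for_alpha(alpha)  # 30 for this alpha

# Compare both lobes at increasing angles between the normal and the half vector.
for degrees in (0, 10, 20, 30, 45, 60):
    n_dot_h = math.cos(math.radians(degrees))
    print(f"{degrees:2d} deg  Blinn-Phong {blinn_phong_ndf(n_dot_h, exponent):.4g}"
          f"  GGX {ggx_ndf(n_dot_h, alpha):.4g}")
```

With matched peaks, the Blinn-Phong lobe is actually slightly higher just off the peak, but GGX falls off far more slowly at wider angles: roughly three times higher at 30° and many orders of magnitude higher at 60°. That long tail is what produces the softer glow around GGX highlights.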

So, while this is certainly still not a fully physically based system, I was able to make significant improvements relatively quickly while keeping the computational cost nearly the same. I call that a win. Next time, I plan to delve into my finished multithreaded renderer and talk about how incredibly wrong some of the code I previously posted about it was. So, it’ll be fun. Especially for me. Look forward to it!