I stay pretty busy at DigiPen, especially a week out from our gold milestone. Despite that, I still try to play SOME games, both to stay current with what's going on in the industry and to stay sane in the face of a hectic work schedule. After getting home from GDC, I promptly bought Tomb Raider (not quite finished, but expect a review soonish) and Bioshock Infinite, despite what a terrible idea that was for productivity. I just completed Bioshock this weekend, and I felt pretty strongly about the ending, so I thought I'd write about it. So, if you haven't beaten the game yet, this is your warning. SPOILERS AHEAD!

So, overall, I really liked the game. I think it took the mechanics, themes, and ideas from the previous games and pushed them in a genuinely interesting direction. I found Bioshock 2 to be decent, but a pretty lackluster sequel compared to the original. It would have been easy for Infinite to be more of the same, but it wasn't. I felt more compelled by the story in this game than I did in the first Bioshock. And despite being an FPS, Bioshock is really all about the story in my mind. Which brings me to the ending…

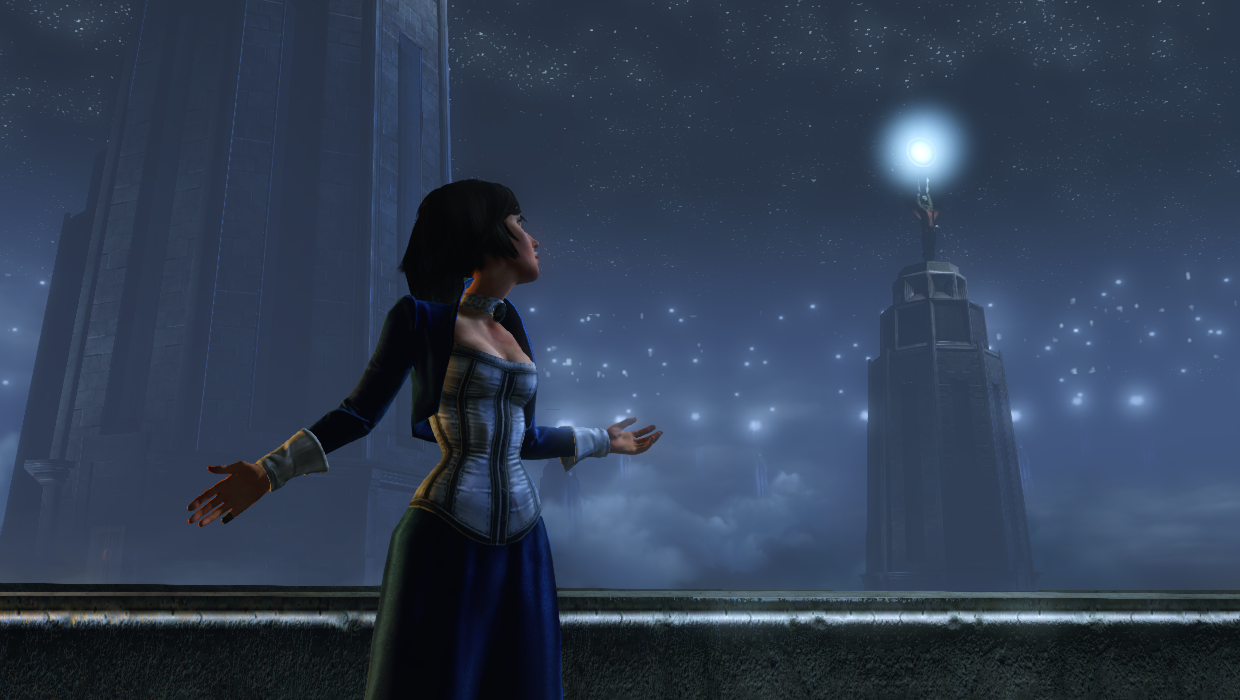

I think this is going to be a pretty unpopular opinion, but I absolutely hated the very end of this game. The idea of a multiverse built around this game world was really intriguing, and I honestly didn't see it coming that Elizabeth was Anna. So, even up to the point where we're wandering the infinite lighthouses, I was still hooked. But then, out of nowhere, at the very end we get Butterfly Effect'ed. Seriously? We spend the whole game building a relationship with these characters, getting to know them, building empathy for them. Irrational took a lot of care to put tons of emotion into Elizabeth's facial expressions and to give her a range of unique interactions throughout the world to really flesh out her personality. And while it sucks when one or both main characters get killed tragically, that happens in good stories sometimes; it throws a wrench into the works. But to just blink both characters, and this entire, rich universe, out of existence angers me so much. And the worst part is that I would totally love to play another game in Columbia with Elizabeth in tow.

Maybe it's for the best. Rather than make a sequel to this game that falls flat like Bioshock 2, maybe we'll get yet another great setting like Columbia and yet another great set of characters like Booker and Elizabeth. Maybe. I don't know. It feels a little like how I imagine those crazy people who are still angry at BioWare felt about the ending of Mass Effect 3. Except that I'm not an insane zealot. Irrational obviously knows what they're doing most of the time. Regardless, in the short term, the end of this game left me feeling really unsatisfied. Maybe with more time to reflect I'll change my opinion, but for right now, it's an amazing game that ends with a whimper.