Last time I said I would be talking about my work on terrain and some errors I ran into over the course of developing my framework for this year. So, surprisingly, that’s what’s about to happen.

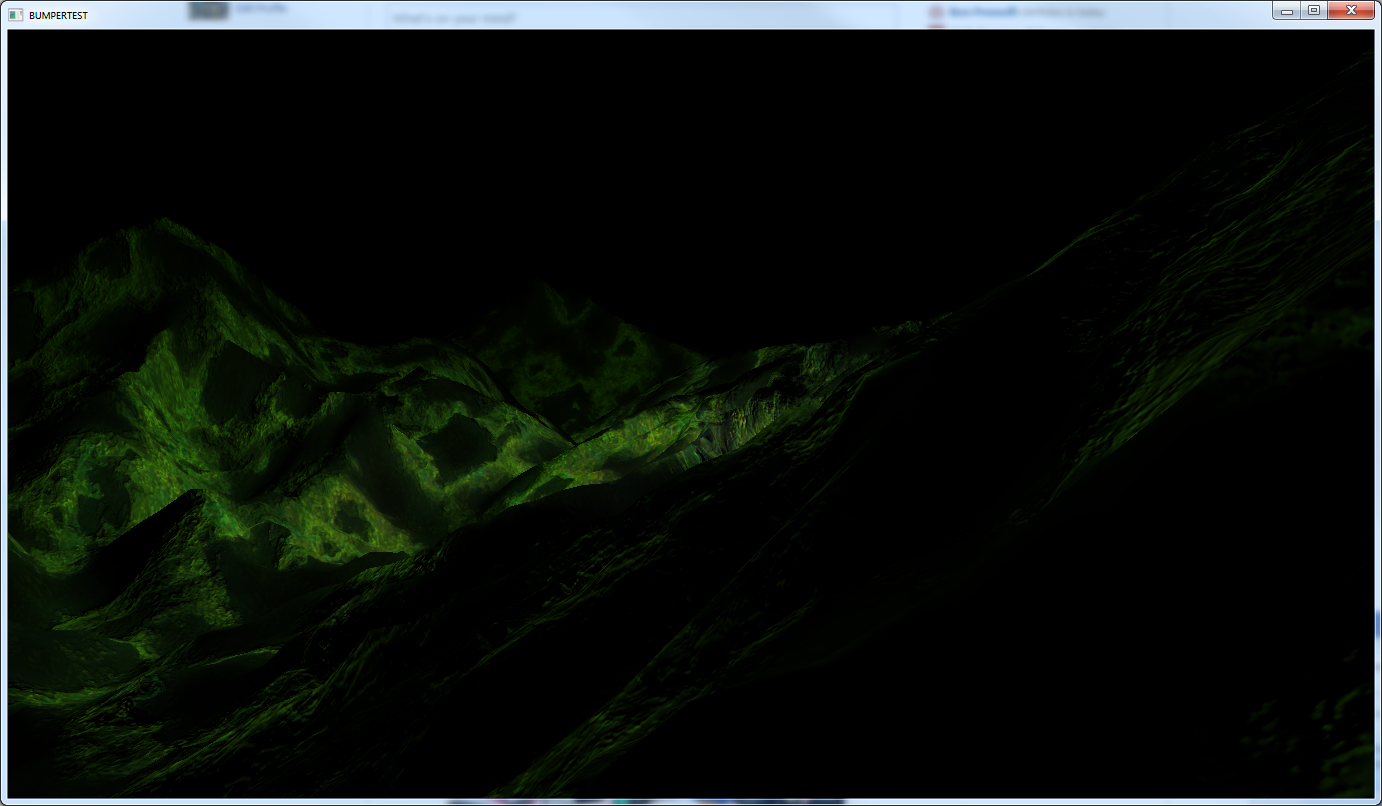

I’ll talk about terrain first because it’s visually more appealing (and I actually remembered to take screenshots of it!) and also likely infinitely less boring to people who aren’t me. Right now I’m taking a fairly traditional approach: using a heightmap to generate a 2D grid of vertices and decoding the color value of each pixel into a height value. I’m still early in my implementation, so things are very basic but functional. Here’s a screen capture:

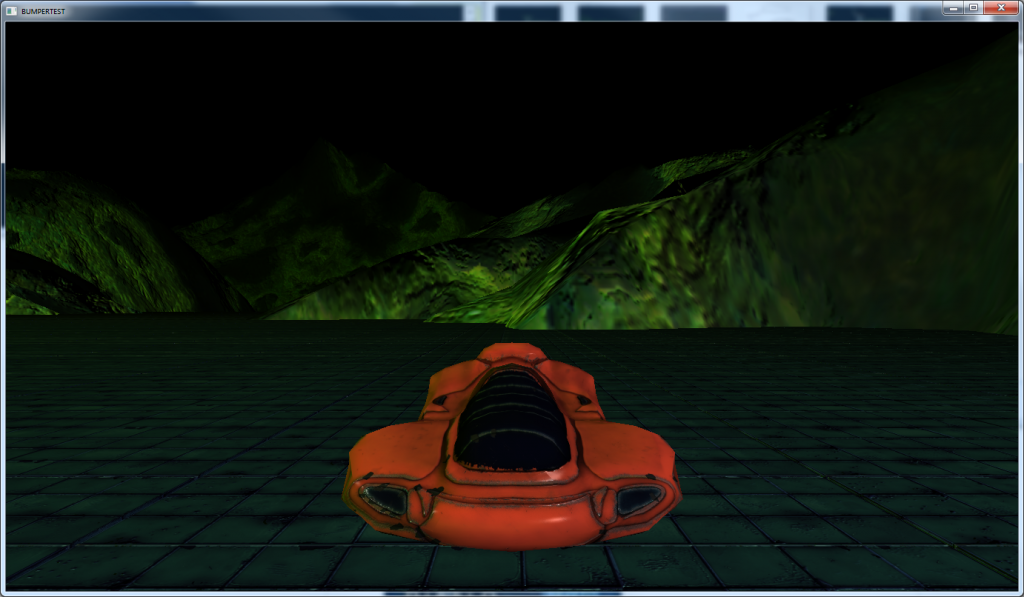

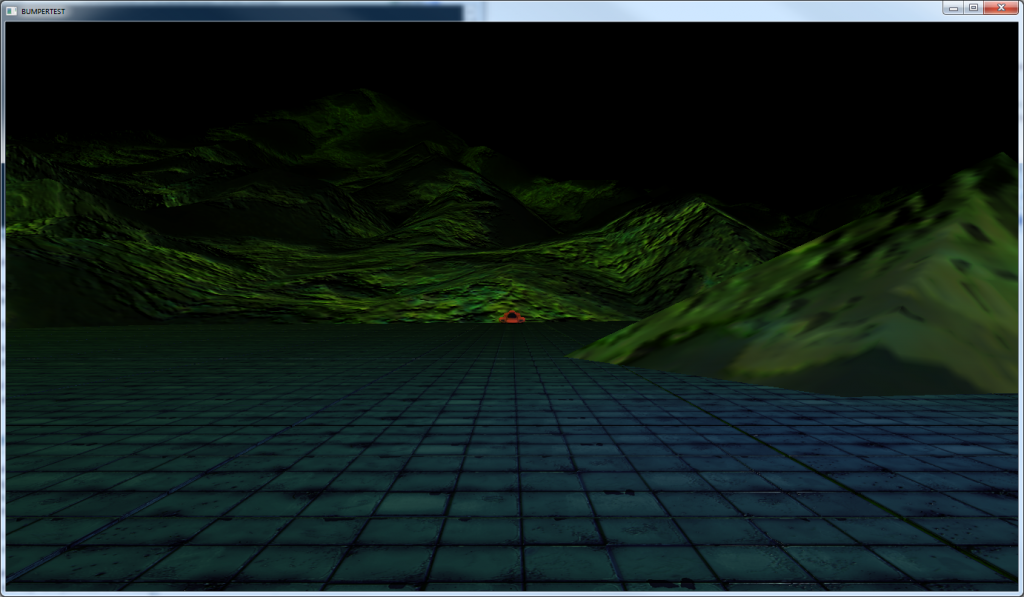

So, again, I’m still very early in my implementation. That image represents approximately three days’ worth of work. I still have a lot to do in spatial partitioning, LOD, and blending different detail maps to get something that looks and performs as well as I need it to. I also found an NVIDIA sample that uses the tessellation hardware to great effect, and I want to try to incorporate it. However, I can already generate 10 square kilometers of game-world terrain and maintain over 60 fps, so I’m not unhappy with how things are going. To get a sense of the scale, here are some images:

Now, unfortunately, these screenshots have different terrains in them, which kind of ruins the effect I was trying to illustrate. But the movement of the terrain is minuscule as the car moves out of view into the far distance. I would have retaken them to fix this inconsistency, except I’ve made dramatic changes to the lighting model that I want to save for my next post. Sorry!
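To make the heightmap approach described earlier a bit more concrete, here is a minimal sketch of decoding pixels into a vertex grid. It is not the framework's actual code: the `Vertex` struct, the `BuildTerrainVertices` name, the 8-bit grayscale input, and the `cellSize` and `maxHeight` parameters are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical vertex layout: position only.
struct Vertex { float x, y, z; };

// Hypothetical helper: turns a row-major, 8-bit grayscale heightmap into a
// width x height grid of vertices. Each pixel value (0..255) is normalized
// and scaled by maxHeight; cellSize is the spacing between neighbors.
std::vector<Vertex> BuildTerrainVertices(const std::vector<std::uint8_t>& pixels,
                                         std::size_t width, std::size_t height,
                                         float cellSize, float maxHeight)
{
    assert(pixels.size() == width * height);

    std::vector<Vertex> vertices;
    vertices.reserve(width * height);
    for (std::size_t row = 0; row < height; ++row) {
        for (std::size_t col = 0; col < width; ++col) {
            const float normalized = pixels[row * width + col] / 255.0f;
            vertices.push_back({ col * cellSize,
                                 normalized * maxHeight,
                                 row * cellSize });
        }
    }
    return vertices;
}
```

An index buffer connecting neighboring vertices into triangles would sit on top of this grid. A real heightmap might also pack height into more than one color channel for extra precision, which this sketch ignores.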

And that brings me to the other issue I wanted to talk about: errors in the framework. Some of them have subtle causes but obvious symptoms. For example, setting the depth function to D3D11_COMPARISON_EQUAL can be a great way to reuse depth information and eliminate pixel overdraw on subsequent material passes. However, if one pass multiplies the view-projection matrix against the world matrix on the CPU and another does it on the GPU, you will not get the result you want: the two paths round differently, so the depths they produce are not bit-identical and the equality test fails. It seems obvious as I type it, but it took me about 30 minutes to realize what was going on. At least it was incredibly obvious that something WAS wrong, because the output was broken.
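Here is a small CPU-only sketch of one way this kind of mismatch can arise. It assumes the difference comes from the order of floating-point operations (combining the matrices first versus transforming the vertex step by step); the post only says "differing precision", so treat that as a guess at the mechanism. The `Mat4` and `Vec4` types, the helper names, and the deliberately extreme translation values are all made up for illustration.

```cpp
#include <array>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major, column vectors

Mat4 Multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};  // zero-initialized
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

Vec4 Transform(const Mat4& m, const Vec4& v) {
    Vec4 r{};  // zero-initialized
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}

// Identity matrix with a translation along z (stand-in for real matrices).
Mat4 TranslateZ(float dz) {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    m[2][3] = dz;
    return m;
}

// Pass A: matrices are combined once up front, then applied to the vertex.
float DepthCombinedFirst(const Mat4& viewProj, const Mat4& world, const Vec4& p) {
    return Transform(Multiply(viewProj, world), p)[2];
}

// Pass B: the vertex goes through world, then view-projection, step by step.
float DepthStepByStep(const Mat4& viewProj, const Mat4& world, const Vec4& p) {
    return Transform(viewProj, Transform(world, p))[2];
}
```

With a world matrix of `TranslateZ(1.0e8f)`, a stand-in view-projection of `TranslateZ(-1.0e8f)`, and the point `{0, 0, 1, 1}`, the two functions return different z values under ordinary IEEE 754 single-precision arithmetic, even though they are mathematically identical. Real scenes differ by far less, but an equality depth test needs the bits to match exactly, so the safer design is to run every pass through the same transform path.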

Things get tougher when your error produces continuous, plausible-looking output and you don’t realize anything is wrong, and this can happen a lot in graphics. A good example is depth data usage in my lighting and postprocessing. The image in my last post was actually completely incorrect, but I didn’t realize it when I took it. Tweaking some data led me to realize that moving the camera far enough away caused illumination to zero out, which makes no sense. But for a large range of distances it seemed correct, so it took me a while to even realize there was a problem. A good way to simulate this (though it wasn’t my actual problem) is to bind the active depth buffer as the shader resource view my lighting is sampling, which ensures I get an all-black texture. That’s obviously wrong, but the output can seem right for the right data set. This problem also manifested itself in my depth of field effect. When I first implemented it, I happened upon values that worked against my incorrect depth data. It wasn’t until I started tweaking focal distance and width that I realized something was very wrong.

Those problems might be specific to me, but I think the real takeaway here is that when you implement something new in graphics, you really need to test a wide range of data to ensure your effect is really doing what you want, and not doing it by circumstance. If you don’t, a fundamental error can persist in your code base for a long, long time without anyone realizing, and it makes it much harder to add new features when basic code additions don’t do what you should reasonably expect them to.
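As a rough illustration of what "testing a wide range of data" could look like for depth-based effects, here is a sketch that sweeps distances from the near plane to the far plane and reports the first one where a depth-to-distance reconstruction drifts too far from the truth. Everything here is an assumption for illustration: the function names, the use of the standard D3D-style 0-to-1 perspective depth mapping, and `double` arithmetic (a shader would use `float`).

```cpp
#include <cmath>
#include <functional>

// Standard D3D-style perspective depth: 0 at the near plane, 1 at the far plane.
double ViewZToDepth(double z, double nearZ, double farZ) {
    return farZ * (z - nearZ) / (z * (farZ - nearZ));
}

// Inverse of ViewZToDepth: recover view-space distance from a depth value.
double DepthToViewZ(double depth, double nearZ, double farZ) {
    return nearZ * farZ / (farZ - depth * (farZ - nearZ));
}

// Hypothetical test helper: samples `steps` (>= 2) log-spaced distances in
// [nearZ, farZ], encodes each to depth, runs `reconstruct` on it, and returns
// the first distance whose relative error exceeds relTol.
// Returns a negative value if every sample is within tolerance.
double FirstBadDistance(const std::function<double(double)>& reconstruct,
                        double nearZ, double farZ, int steps, double relTol)
{
    for (int i = 0; i < steps; ++i) {
        const double t = static_cast<double>(i) / (steps - 1);
        const double z = nearZ * std::pow(farZ / nearZ, t);
        const double depth = ViewZToDepth(z, nearZ, farZ);
        const double error = std::fabs(reconstruct(depth) - z) / z;
        if (error > relTol) return z;
    }
    return -1.0;
}
```

Passing in a reconstruction that uses the wrong far plane (say 100 instead of 1000) shows the failure mode described above: the result is accurate right next to the camera and drifts steadily as distance grows, so a handful of close-up test shots would never catch it, while the sweep does.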

So, that’s it for this time. I wish I’d taken some screenshots of output of the errors I talked about, but I think the information is still pretty worthwhile without pretty pictures. Next time (hopefully soon), I’ll talk about the start of my move to a physically based rendering model, beginning with replacing the specular reflection model.